Hi there! I am an M.S./Ph.D. student at Cornell University, advised by Prof. Zhiru Zhang. I am interested in Electronic Design Automation, Domain-Specific Languages for hardware design and programming, and hardware accelerators.

- ✨ Selected as LAD'25 Fellow at the International Conference on LLM-Aided Design. (12/16/25)
- 🏆 Our ISPD 2025 paper Cypress won the Best Paper Award. [Award] (03/19/25)
- ✨ ARIES was nominated for the Best Paper Award at FPGA 2025. (03/01/25)
- 🏆 Our FPGA 2024 paper HLS Formal Verification won the Best Paper Award. [News] (03/05/24)
- 🏆 Our ACM TRETS 2023 paper RapidLayout won the Best Paper Award. [Award] (07/13/23)
- Donated my hair to Locks of Love to support children suffering from hair loss. [Certificate] (07/04/23)
- ✨ Serving Multi-DNN Workloads on FPGAs was selected as the Featured Paper of IEEE Transactions on Computers. (05/11/23)
- Our patent CN109658402B has been granted. [Certificate] [Google Patents] (04/18/23)
- Selected as DAC Young Fellow. [Certificate] (04/08/23)

M.S./Ph.D. in Electrical and Computer Engineering

B.Eng. in Telecommunication Engineering

PhD Research Intern

Compiler Intern

Exempt Tech Employee

Research Assistant

MITACS

Research Intern

My research spans three areas: hardware design automation and compute-in-SRAM accelerators; accelerator design and programming languages; and energy-efficient machine-learning systems.

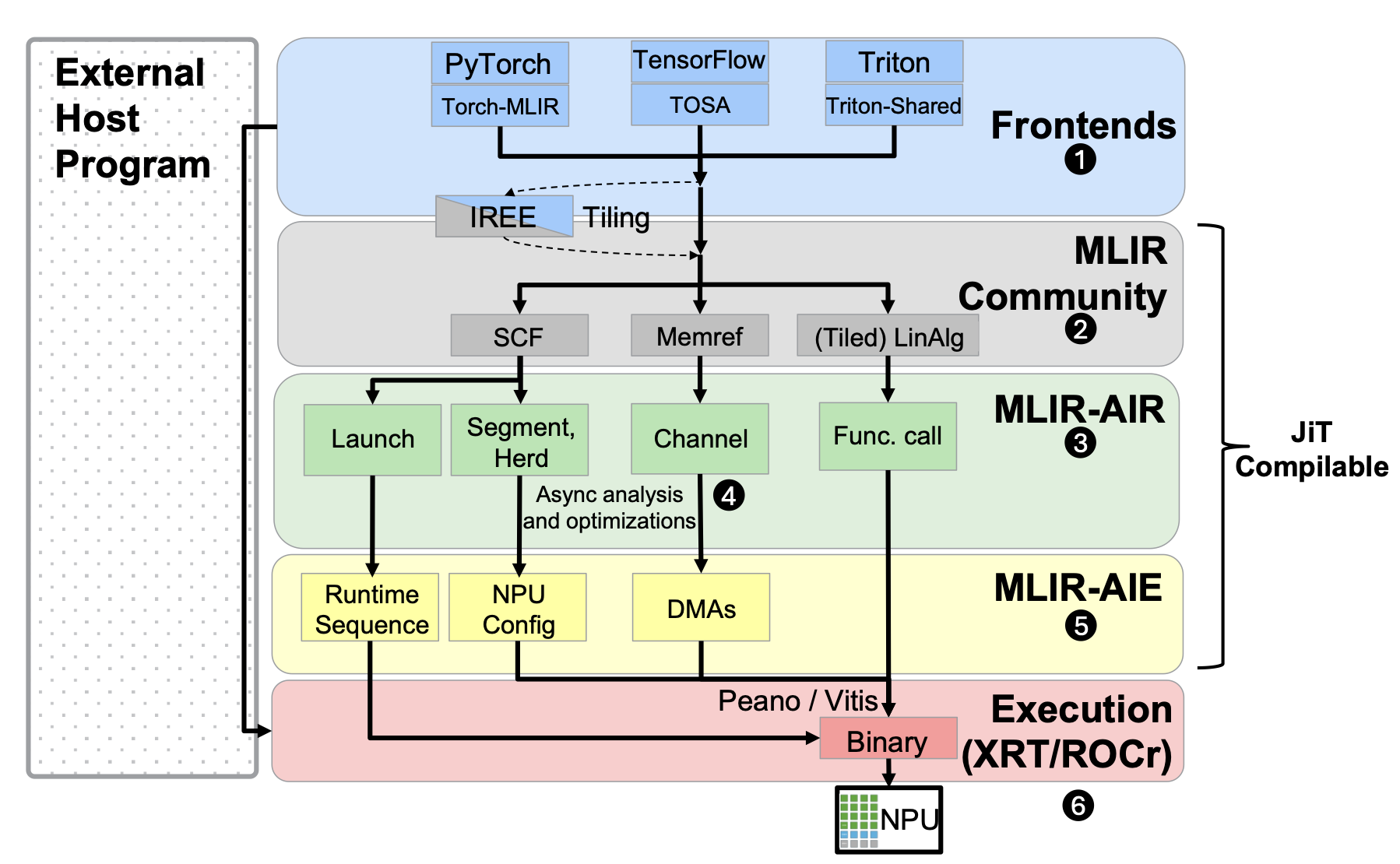

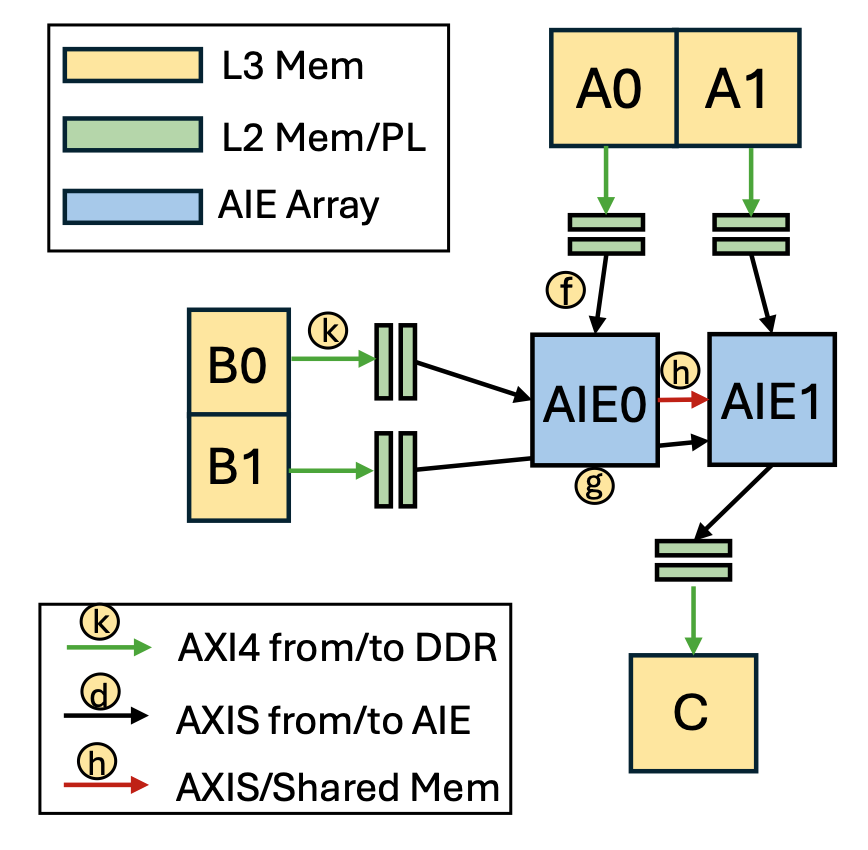

Erwei Wang, Samuel Bayliss, Andra Bisca, Zachary Blair, Sangeeta Chowdhary, Kristof Denolf, Jeff Fifield, Brandon Freiberger, Erika Hunhoff, Phil James-Roxby, Jack Lo, Joseph Melber, Stephen Neuendorffer, Eddie Richter, André Rösti, Javier Setoain, Gagandeep Singh, Endri Taka, Pranathi Vasireddy, Zhewen Yu, Niansong Zhang, Jinming Zhuang
ACM Transactions on Reconfigurable Technology and Systems (TRETS) | To appear | preprint

We introduce MLIR-AIR, an open-source compiler stack built on MLIR that bridges the semantic gap between high-level workloads and fine-grained spatial architectures such as AMD's NPUs, achieving up to 78.7% compute efficiency on matrix multiplication.

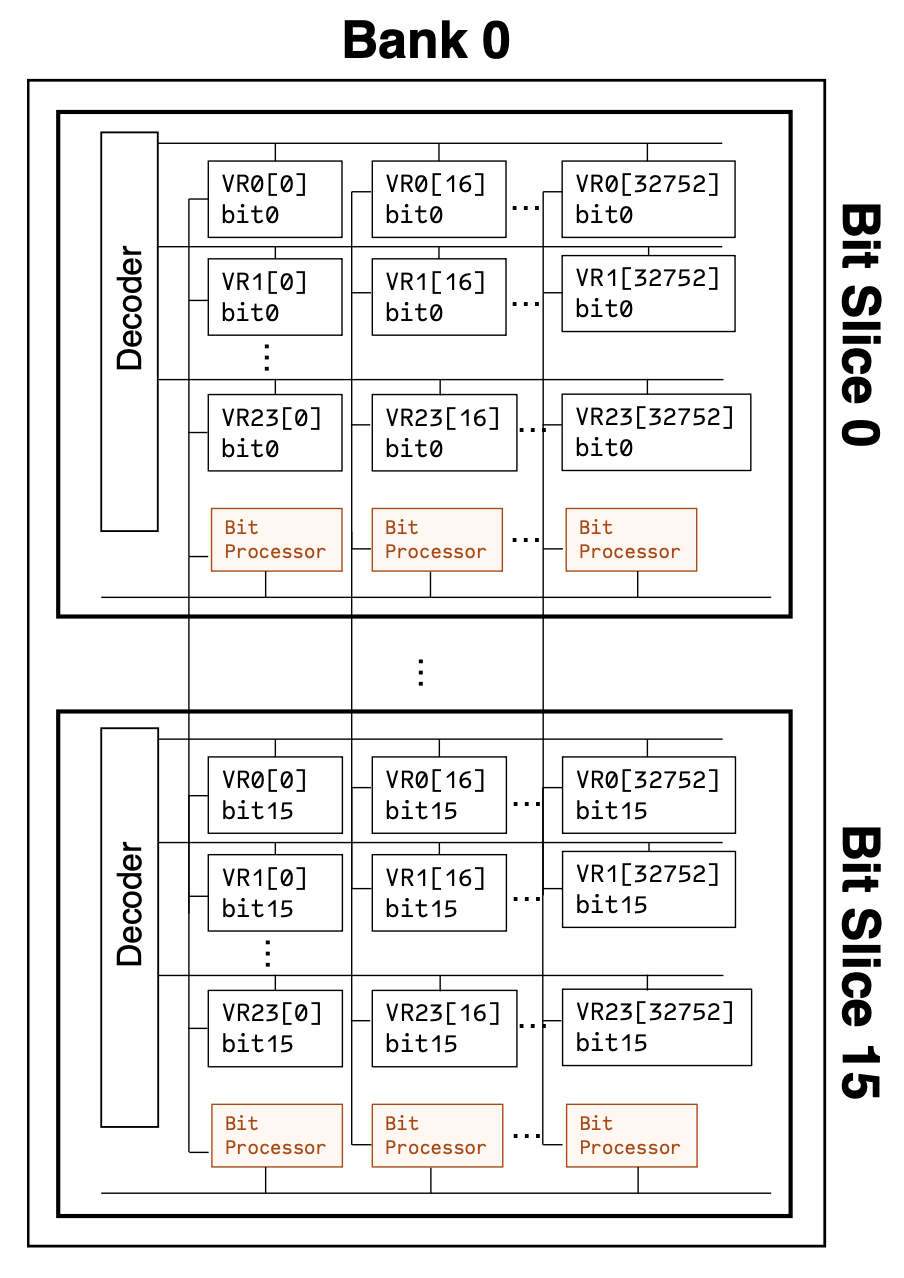

Niansong Zhang, Wenbo Zhu, Courtney Golden, Dan Ilan, Hongzheng Chen, Christopher Batten, Zhiru Zhang
MICRO 2025 | paper

This paper characterizes a commercial compute-in-SRAM device using realistic workloads, proposes key data management optimizations, and demonstrates that the device can match GPU-level performance on retrieval-augmented generation tasks while achieving over 46× energy savings.

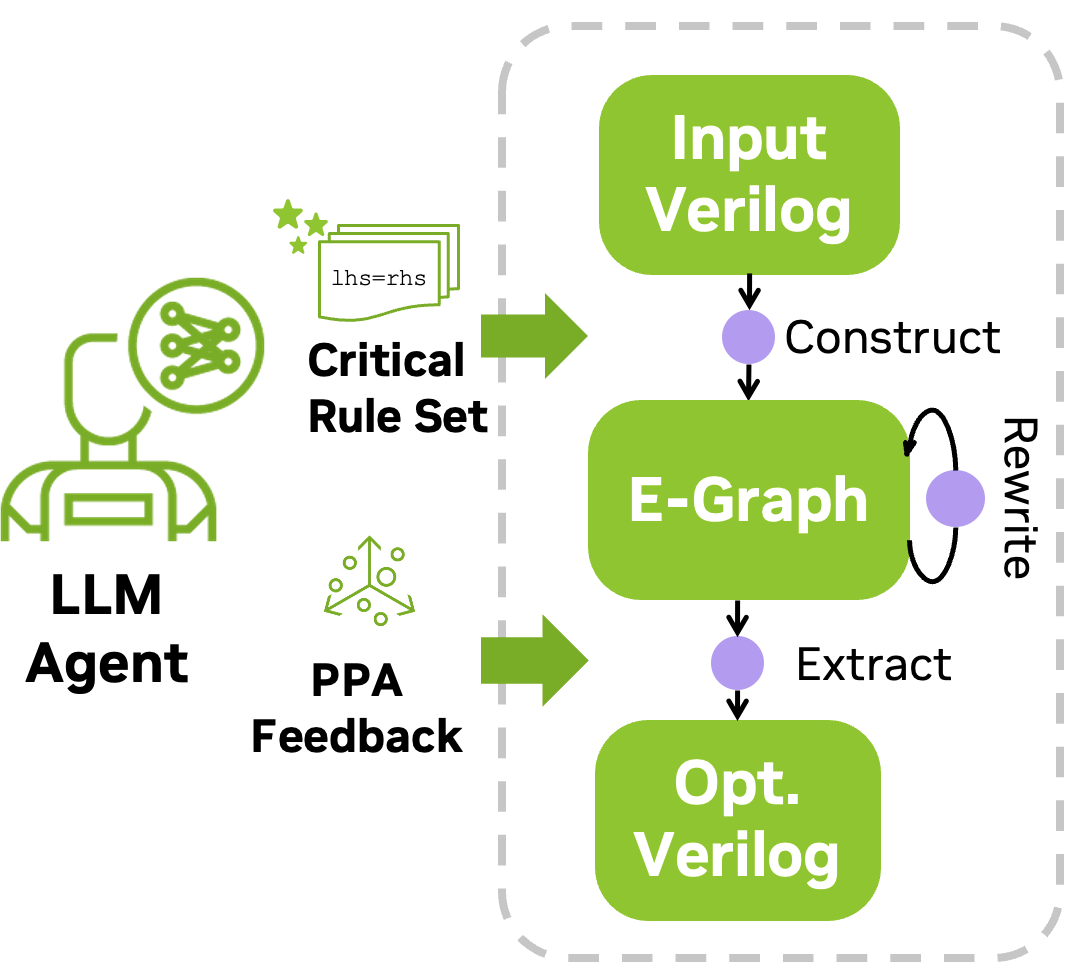

Niansong Zhang, Chenhui Deng, Johannes Maximilian Kuehn, Chia-Tung Ho, Cunxi Yu, Zhiru Zhang, Haoxing Ren
MLCAD 2025 | paper

Why choose between smart and sound? ASPEN uses LLM-guided e-graph rewriting with real PPA feedback for RTL optimization. With 16.51% area and 6.65% delay improvements over prior methods, ASPEN shows you can have both, and it's fully automated.

Niansong Zhang, Anthony Agnesina, Noor Shbat, Yuval Leader, Zhiru Zhang, Haoxing Ren
ISPD 2025 | paper | code

🏆 We present Cypress, a GPU-accelerated, VLSI-inspired PCB placer that boosts routability by up to 5.9×, cuts track length by 19.7×, and runs up to 492× faster on new realistic benchmarks.

Jinming Zhuang*, Shaojie Xiang*, Hongzheng Chen, Niansong Zhang, Zhuoping Yang, Tony Mao, Zhiru Zhang, Peipei Zhou (* Equal Contribution)
FPGA 2025 | paper | code

🏅 We propose ARIES, a unified MLIR-based compilation flow that abstracts task-, tile-, and instruction-level parallelism across AMD AI Engine arrays (and optional FPGA fabric), boosting Versal VCK190 GEMM throughput by up to 1.6× over prior work.

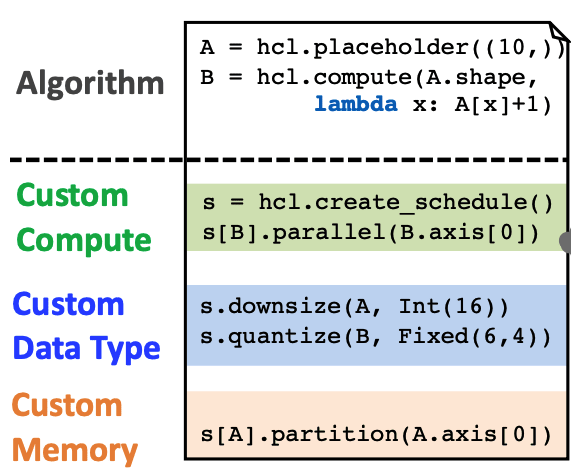

Hongzheng Chen*, Niansong Zhang*, Shaojie Xiang, Zhichen Zeng, Mengjia Dai, Zhiru Zhang (* Equal Contribution)
PLDI 2024 | paper | code

Specialized hardware accelerators are vital for performance improvements, but current design languages are inadequate for complex accelerators. Allo, a new composable programming model, decouples hardware customizations from algorithms and outperforms existing languages in both performance and productivity.

Louis-Noël Pouchet, Emily Tucker, Niansong Zhang, Hongzheng Chen, Debjit Pal, Gabriel Rodríguez, Zhiru Zhang
FPGA 2024 | paper | code

🏆 We target the problem of efficiently checking semantic equivalence between two programs written in C/C++ as a means of ensuring the correctness of the description provided to the HLS toolchain, by proving that an optimized code version fully preserves the semantics of the unoptimized one.

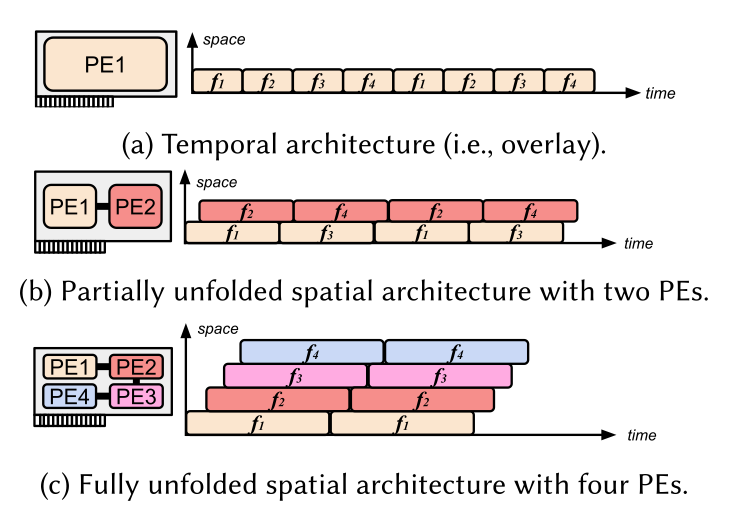

Hongzheng Chen, Jiahao Zhang, Yixiao Du, Shaojie Xiang, Zichao Yue, Niansong Zhang, Yaohui Cai, Zhiru Zhang
ACM Transactions on Reconfigurable Technology and Systems (TRETS), Vol. 18, No. 1, Article 5

We design a spatial FPGA accelerator for LLM inference that assigns each operator its own hardware block and connects the blocks with on-chip dataflow to cut memory traffic and latency. An analytical model guides parallelization and scaling, showing when FPGAs can outpace GPUs.

Courtney Golden, Dan Ilan, Caroline Huang, Niansong Zhang, Zhiru Zhang, Christopher Batten
IEEE Computer Architecture Letters | paper

We implement a virtual vector instruction set on a commercial compute-in-SRAM device and perform detailed instruction microbenchmarking to identify its performance benefits and overheads.

Shulin Zeng, Guohao Dai, Niansong Zhang, Xinhao Yang, Haoyu Zhang, Zhenhua Zhu, Huazhong Yang, Yu Wang
IEEE Transactions on Computers | paper

🏅 This paper proposes a design space exploration framework that jointly optimizes the heterogeneous multi-core architecture, layer scheduling, and compiler mapping for serving DNN workloads on cloud FPGAs.

Debjit Pal, Yi-Hsiang Lai, Shaojie Xiang, Niansong Zhang, Hongzheng Chen, Jeremy Casas, Pasquale Cocchini, Zhenkun Yang, Jin Yang, Louis-Noël Pouchet, Zhiru Zhang
Invited Paper, DAC 2022 | paper

We show the advantages of the decoupled programming model and further discuss some of our recent efforts to enable a robust and viable verification solution in the future.

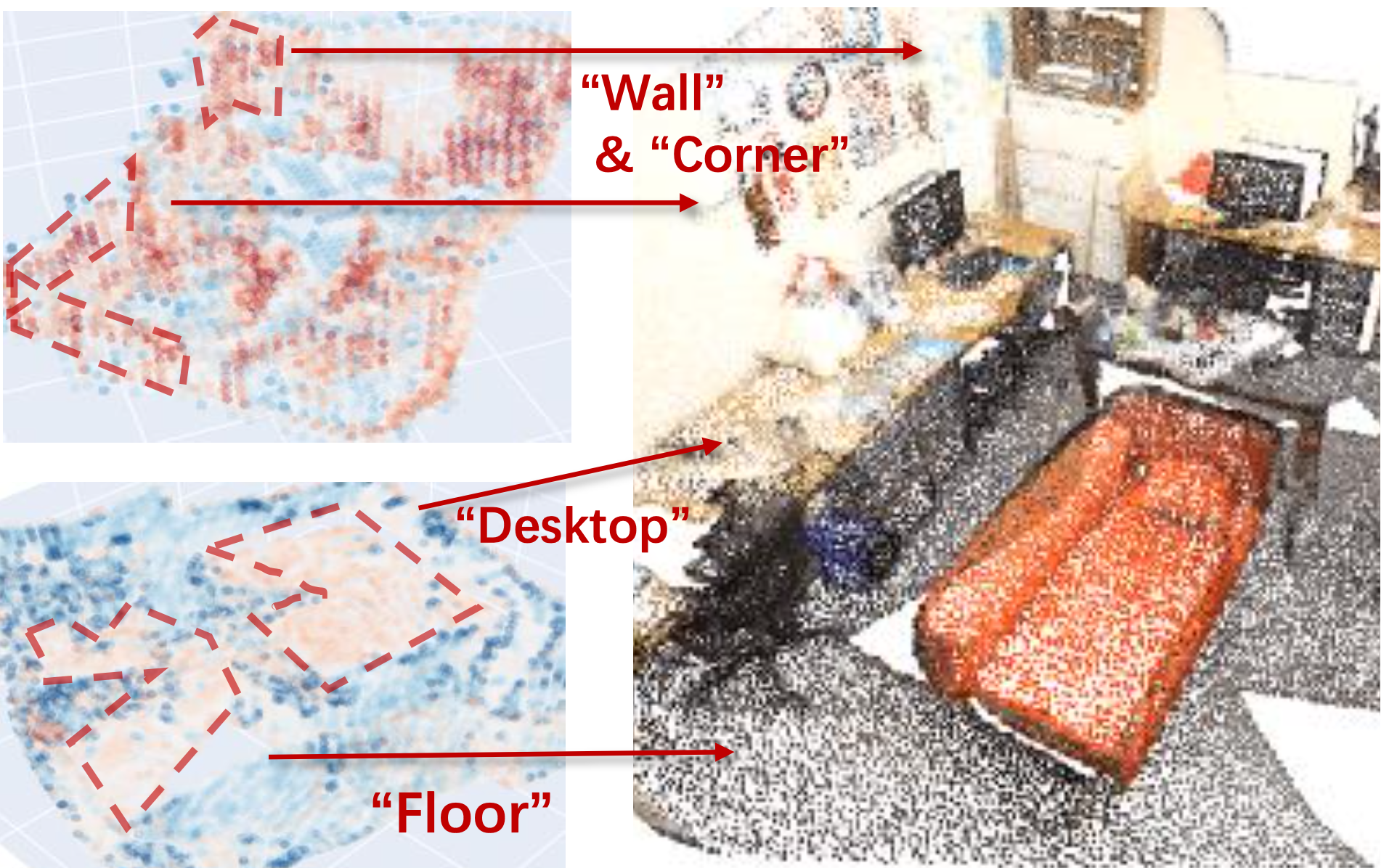

Tianchen Zhao, Niansong Zhang, Xuefei Ning, He Wang, Li Yi, Yu Wang
CVPR 2022 | paper | website | slides | poster | video

We propose a flexible 3D transformer on sparse voxels to address the generalization issue of transformers. CodedVTR (Codebook-based Voxel TRansformer) decomposes the attention space into linear combinations of learnable prototypes to regularize attention learning. We also propose geometry-aware self-attention, which guides training with geometric patterns and voxel density.

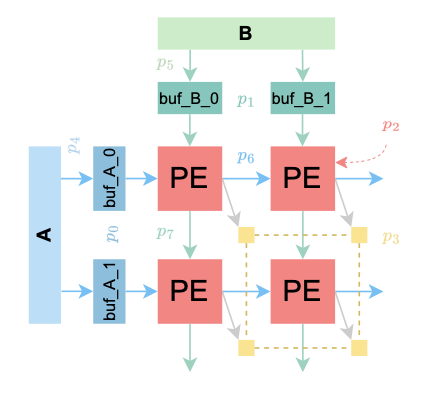

Shaojie Xiang, Yi-Hsiang Lai, Yuan Zhou, Hongzheng Chen, Niansong Zhang, Debjit Pal, Zhiru Zhang
FPGA 2022 | paper | code

We propose an FPGA accelerator programming model that decouples the algorithm specification from optimizations related to orchestrating the placement of data across a customized memory hierarchy.

Niansong Zhang, Xiang Chen, Nachiket Kapre
ACM Transactions on Reconfigurable Technology and Systems (TRETS), Vol. 15, Issue 4, Article No. 38, pp. 1–23

🏆 We extend our previous work on RapidLayout with cross-SLR routing, placement transfer learning, and placement bootstrapping from a much smaller device to improve runtime and design quality.

Xuefei Ning, Changcheng Tang, Wenshuo Li, Songyi Yang, Tianchen Zhao, Niansong Zhang, Tianyi Lu, Shuang Liang, Huazhong Yang, Yu Wang
arXiv preprint | paper | code

We build an open-source Python framework that implements various NAS algorithms in a modular and extensible manner.

Niansong Zhang, Xiang Chen, Nachiket Kapre
FPL 2020 | paper | code

🏅 We build a fast, high-performance evolutionary placer for FPGA-optimized hard-block designs that targets high clock frequencies, such as 650+ MHz.

Hongzheng Chen*, Niansong Zhang*, Shaojie Xiang, Zhiru Zhang
MLIR Open Design Meeting (08/11/2022) | video | slides | website
CRISP Liaison Meeting (09/28/2022) | news | slides | website

We decouple hardware customizations from algorithm specifications at the IR level to (1) provide a general platform for high-level DSLs, (2) boost performance and productivity, and (3) make customization verification scalable.

Shulin Zeng, Guohao Dai, Niansong Zhang, Yu Wang
MICRO 2021 ASCMD Workshop | paper | video

We propose a multi-node and multi-core accelerator architecture and a decoupled compiler for cloud-backed INFerence-as-a-Service (INFaaS).

- Student Volunteer: FCCM’22
- Reviewer: ICCAD 2022, 2023; TRETS; TCAS II

- [ECE 2300] Digital Logic and Computer Organization, Head TA, Spring 2024
- [ECE 5775] High-Level Digital Design Automation, Part-time TA, Fall 2022

- Best Paper Award at ISPD 2025
- Best Paper Nomination at FPGA 2025
- Best Paper Award at FPGA 2024
- Best Paper Award for ACM TRETS in 2023
- Best Paper Nomination (Michal Servit Award) at FPL 2020
- DAC Young Fellow 2021 & 2023
- Outstanding Bachelor Thesis Award | Sun Yat-sen University
- Mitacs Globalink Research Internship Award | Mitacs, Canada
- First-class Merit Scholarship x2 | Sun Yat-sen University
- Lin and Liu Foundation Scholarship | SEIT, Sun Yat-sen University

- Niansong Zhang, Haoxing Ren, Brucek Khailany, "Printed Circuit Board Component Placement." US Patent 24-0963US2, filed in February 2025.
- Niansong Zhang, Songyi Yang, Shun Fu, Xiang Chen, "Industry Profile Geometric Dimension Automatic Measuring Method Based on Computer Vision Imaging." Chinese Patent CN201811539019.8A, filed on December 17, 2018, and issued on April 19, 2019.
- Niansong Zhang (at Novauto Technology), "A Pruning Method and Device of Multi-task Neural Network Models." Chinese Patent 202010805327.1, filed on August 12, 2020.